NeuralSound: Learning-based Modal Sound Synthesis with Acoustic Transfer

SIGGRAPH 2022

¹ Peking University

² University of Maryland

Abstract

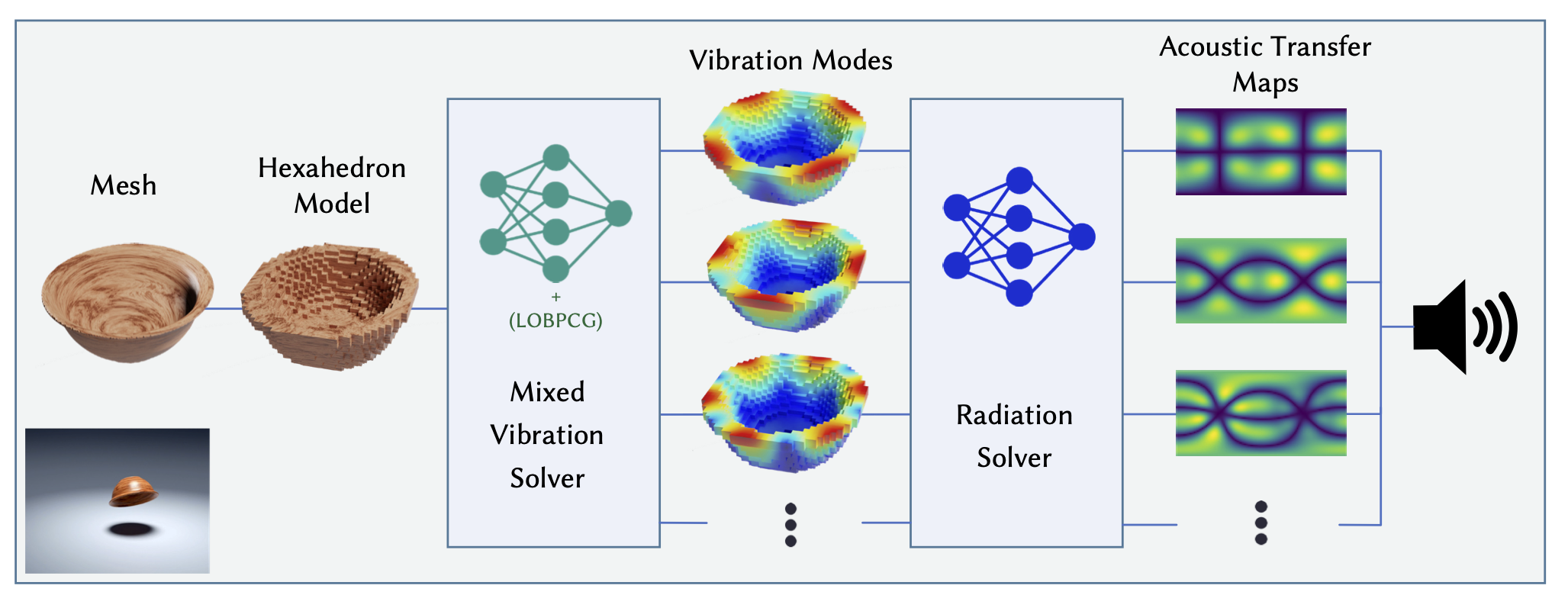

We present a novel learning-based modal sound synthesis approach that includes a mixed vibration solver for modal analysis and a radiation network for acoustic transfer. Our mixed vibration solver consists of a 3D sparse convolution network and a Locally Optimal Block Preconditioned Conjugate Gradient (LOBPCG) module for iterative optimization. Moreover, we highlight the correlation between a standard numerical vibration solver and our network architecture. Our radiation network predicts the Far-Field Acoustic Transfer maps (FFAT Maps) from the surface vibration of the object. The overall running time of our learning-based approach for most new objects is less than one second on an RTX 3080 Ti GPU, while maintaining high sound quality close to the ground truth solved by standard numerical methods. We also evaluate the numerical and perceptual accuracy of our approach on different objects with various shapes and materials.
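For readers new to modal sound synthesis, the following is a minimal sketch of the standard modal model that a pipeline like this one ultimately feeds. It is not the authors' implementation. Each vibration mode with eigenvalue λ contributes a damped sinusoid, and the per-mode amplitude stands in for the value that acoustic transfer would supply. The function name `synthesize`, the Rayleigh damping coefficients `alpha` and `beta`, and the `amplitudes` list are all illustrative assumptions.

```python
import math

def synthesize(eigenvalues, amplitudes, alpha, beta, duration=1.0, sample_rate=44100):
    """Sum damped sinusoids, one per vibration mode (hypothetical helper).

    eigenvalues: modal eigenvalues (squared undamped angular frequencies, rad^2/s^2)
    amplitudes:  per-mode gains; a stand-in for acoustic transfer values
    alpha, beta: Rayleigh damping coefficients (assumed material parameters)
    """
    n = int(round(duration * sample_rate))
    samples = [0.0] * n
    for lam, amp in zip(eigenvalues, amplitudes):
        omega = math.sqrt(lam)               # undamped angular frequency
        decay = 0.5 * (alpha + beta * lam)   # exponential decay rate under Rayleigh damping
        if decay >= omega:
            continue                         # overdamped mode: no audible oscillation
        omega_d = math.sqrt(omega * omega - decay * decay)  # damped angular frequency
        for i in range(n):
            t = i / sample_rate
            samples[i] += amp * math.exp(-decay * t) * math.sin(omega_d * t)
    return samples

# Example: a single 440 Hz mode that rings and decays over half a second.
lam_440 = (2.0 * math.pi * 440.0) ** 2
audio = synthesize([lam_440], [1.0], alpha=20.0, beta=0.0, duration=0.5, sample_rate=8000)
```

In this simplified picture, modal analysis would supply the eigenvalues, and acoustic transfer would supply listener-dependent amplitudes. Here both are fixed by hand.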

Bibtex

@inproceedings{jin2022neuralsound,
  title = {NeuralSound: Learning-based Modal Sound Synthesis with Acoustic Transfer},
  author = {Jin, Xutong and Li, Sheng and Wang, Guoping and Manocha, Dinesh},
  url = {https://doi.org/10.1145/3528223.3530184},
  journal = {ACM Transactions on Graphics (SIGGRAPH 2022)},
  year = {2022}
}